Gathering Data Using Arc Collector

Field Activity #7

Introduction-

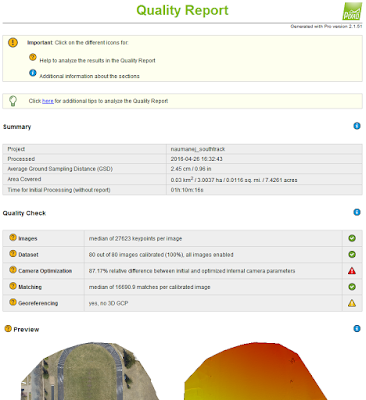

For the seventh field activity, we were asked to pose a question, create a database, and collect and display data to try to answer that question. This was the first lab in which we created and used our own database to answer a question of our own. My question was: which faculty parking lot on campus has the most full-sized trucks parked in it? Several thoughts came to mind when considering this question. Are there enough trucks on campus to make the question worthwhile? Which parking lot would have the most? Would weather be a factor? How could this data benefit insurance companies?

The database had to follow a few guidelines. The feature class needed at least three fields for entering attribute data: a text field for notes, a floating-point or integer field, and a category field. Following these guidelines and setting up the database properly made it easier to compile data in the field. Good database design is essential to keeping data organized and valid during collection, and it can cut down on collection time. It is also worth remembering that the person who creates the database is not always the person collecting the data. Clear alias names on the fields help the collection crew understand what the database creator means by each field. A notes field also lets the collector document anything they believe the database creator missed.
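The following Python sketch shows one way to make the field guidelines concrete. It uses the fields listed in the conclusion of this post: truck make as a drop-down, number of trucks, estimated year, and notes. It is not how Arc Collector or ArcGIS actually stores a feature class. It only models the same design ideas: a category field with a fixed list of values, integer fields, a free-text notes field, and alias names for the collection crew. The field names, alias strings, list of makes, and year range check are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class TruckMake(Enum):
    """Category field. In the field app this would appear as a drop-down list.
    The makes listed here are hypothetical examples."""
    FORD = "Ford"
    CHEVROLET = "Chevrolet"
    RAM = "Ram"
    GMC = "GMC"
    TOYOTA = "Toyota"
    OTHER = "Other"


# Hypothetical alias names shown to the collection crew instead of raw field names.
FIELD_ALIASES = {
    "make": "Truck Make",
    "truck_count": "Number of Trucks",
    "est_year": "Estimated Model Year",
    "notes": "Notes",
}


@dataclass
class TruckObservation:
    make: TruckMake          # category field (fixed list of values)
    truck_count: int         # integer field
    est_year: Optional[int]  # integer field, may be left blank
    notes: str = ""          # free-text notes field

    def validate(self) -> list[str]:
        """Return a list of problems found; an empty list means the record passes."""
        problems = []
        if self.truck_count < 1:
            problems.append("Number of Trucks must be at least 1")
        # Hypothetical sanity range for an estimated model year.
        if self.est_year is not None and not (1950 <= self.est_year <= 2030):
            problems.append("Estimated Model Year looks out of range")
        return problems


obs = TruckObservation(TruckMake.FORD, 1, 2015, "parked along the back row")
print(obs.validate())  # an empty list, since this record passes both checks
```

Restricting the make to a fixed list does the same job as a drop-down in the field. It keeps spelling variations such as "Chevy" and "Chevrolet" from being recorded as separate categories, which would otherwise need to be cleaned up before mapping.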

Study Area-

Methods-

The data for this project was collected on a Friday from 2:30 pm to 3:30 pm. Because sections of these parking lots open to "no pass" vehicles at 3 pm, I wanted to see how many trucks would be in these sections before and after 3 pm. I also expected that collecting around these times would mean there would not be a huge number of trucks in either lot, but I was surprised by what I found. Before collecting the data, I believed that the Davies lot would have more trucks than the Hibbard lot, since the Davies lot is much bigger. I was wrong: the Hibbard lot actually had more trucks.

One problem I had when collecting the data for this question was maintaining the integrity of the point locations. I wanted to stand directly behind each truck while collecting, so that all my points would be placed consistently. Although I did stand directly behind the trucks while gathering the points, another problem came into play: Arc Collector's locations are not always accurate, as Figure 1 shows.

Fig. 1- Location of three points collected using Arc Collector that are not accurate.

Figure 1 displays three points from the data collected in the Davies parking lot. Clearly no trucks were parked on the sidewalk or on the grass, but because Arc Collector is not always accurate, I was left with these points. This skews some of my data, because at other times, as in Figure 2, the points were placed exactly where I was standing during collection.

Fig. 2- Location of three points that are accurate with Arc Collector.

Figure 2 displays points collected with Arc Collector that match where I was standing during collection. Although these points are accurate, the points in Figure 1 are not, and this leaves the integrity of my data in question. One way to prevent this would be to use a more accurate data collection method. Another way to avoid it would have been to get a more accurate location fix by connecting to WiFi.
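Points like those in Figure 1 could also be caught after collection by checking whether each point falls inside the parking lot's boundary. The Python sketch below uses the standard ray-casting point-in-polygon test. It assumes the lot boundary has been digitized as a polygon in a projected coordinate system (for example, meters), so the coordinates can be treated as flat x/y values. The function names and the idea of running this as a separate check are assumptions for illustration. This is not a feature of Arc Collector, and GIS software typically provides its own tools for selecting points within a polygon.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(pt: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: cast a horizontal ray to the right of pt and count
    how many polygon edges it crosses; an odd count means pt is inside."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y value can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets the horizontal line through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def flag_outside_lot(points: dict[str, Point], lot_boundary: Sequence[Point]) -> list[str]:
    """Return the IDs of collected points that fall outside the lot boundary,
    such as points that landed on a sidewalk or on the grass."""
    return [pid for pid, pt in points.items() if not point_in_polygon(pt, lot_boundary)]
```

Flagged points are not necessarily wrong, since a truck could be parked along the edge of a lot. A check like this would only narrow down which points deserve a second look in the notes field or on the map.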

Results-

The data collected for each parking lot was a shock to me. As I mentioned before, I initially believed that the Davies lot would have more full-sized trucks because it is a larger lot and seems to have more traffic flow. With more traffic flow, I also believed there would be more accidents in this lot because of the large vehicles parked there. A larger vehicle is more prone to dinging the doors of other cars or sideswiping them when parking or leaving a space. If data like this were presented to an insurance company, it could potentially come up with a policy stating that large trucks may only park in designated spots. Although this may be impractical, it could potentially save insurance companies money. Figure 3 shows all the full-sized trucks parked in the Davies parking lot.

Fig. 3- All the full-sized trucks parked in the Davies parking lot.

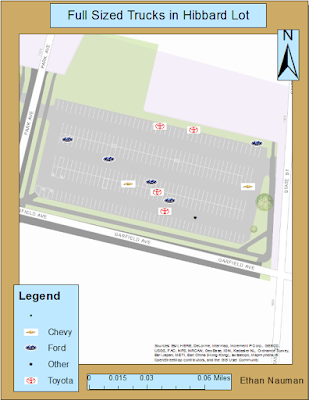

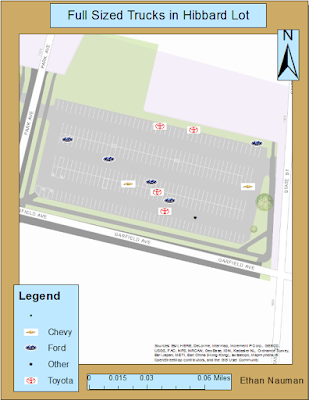

After finishing data collection in the Davies lot, I moved on to the Hibbard lot. The Hibbard lot is located on a busy street and generally allows only faculty parking until 6 pm. Since only faculty can park there, I believed this would mean less traffic and fewer full-sized trucks in this lot. Figure 4 shows all the trucks parked in the Hibbard lot.

Fig. 4- All the full-sized trucks parked in the Hibbard lot.

Looking at the collected data, it is easy to see that the Hibbard lot had more full-sized trucks parked there between 2:30 and 3:30 pm. This suggests that the Hibbard lot saw more faculty traffic during this time of day. The final map, in Figure 5, shows both the Davies and Hibbard lots and all the trucks parked in them.

Fig. 5- Davies and Hibbard parking lots.

Although I focused only on the Davies and Hibbard parking lots, other lots on lower campus can and do have trucks parked in them. Some of these are meter lots, and some are small lots where campus work trucks are parked. It would be interesting to expand my research question to include these lots and to determine the consequences these large vehicles could have there as well.

Conclusion-

Without proper database design, it would have been difficult to gather all the data I needed in the field. The database I created gave me a drop-down list for the make of the truck and a field for how many trucks there were. I also included a notes field and an estimated year field. I could make many different maps combining these fields, but based on my question I focused on how many trucks were in each lot. One thing I would have done differently is to include SUVs and other large four-door vehicles. Trucks are not the only large vehicles that cause problems in tightly spaced parking lots, leading to accidents, dings, and scratches. By expanding the study and comparing the results with information from insurance companies, I believe the conclusion would be to set aside a section in some parking lots just for trucks and other large vehicles. This could lead to fewer insurance claims and make everyone happy. There is nothing worse than coming out of class and finding that you have to squeeze between cars, open your driver's door eight inches, and turn into an octopus just to get into the driver's seat.